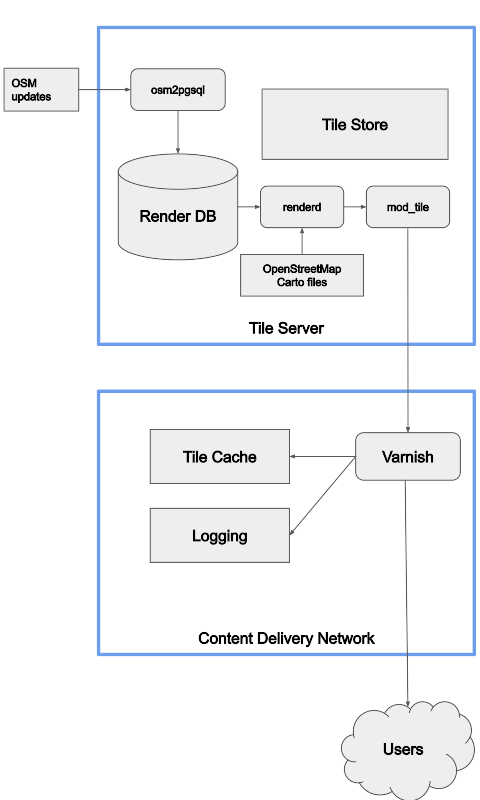

The Standard Tile Layer has a lot of traffic. On August 1st, a typical day, it had 2.8 billion requests served by Fastly, about 32 thousand a second. The challenges of scaling to this size are documented elsewhere, and we handle the traffic reliably, but something we don’t often talk about is the logging. In some cases, you could log a random sample of requests, but that comes with downsides like obscuring low-frequency events and preventing some kinds of log analysis. Critically, we publish data that depends on logging all requests.
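As a quick check on the per-second figure, the daily total can be divided by the number of seconds in a day. This is only a sketch of the arithmetic; it gives a daily average, not a peak rate.

```python
# Average request rate implied by the daily total quoted above.
requests_per_day = 2_800_000_000  # 2.8 billion requests on August 1st
seconds_per_day = 24 * 60 * 60    # 86,400 seconds

requests_per_second = requests_per_day / seconds_per_day
print(f"{requests_per_second:,.0f} requests/second")  # prints "32,407 requests/second"
```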

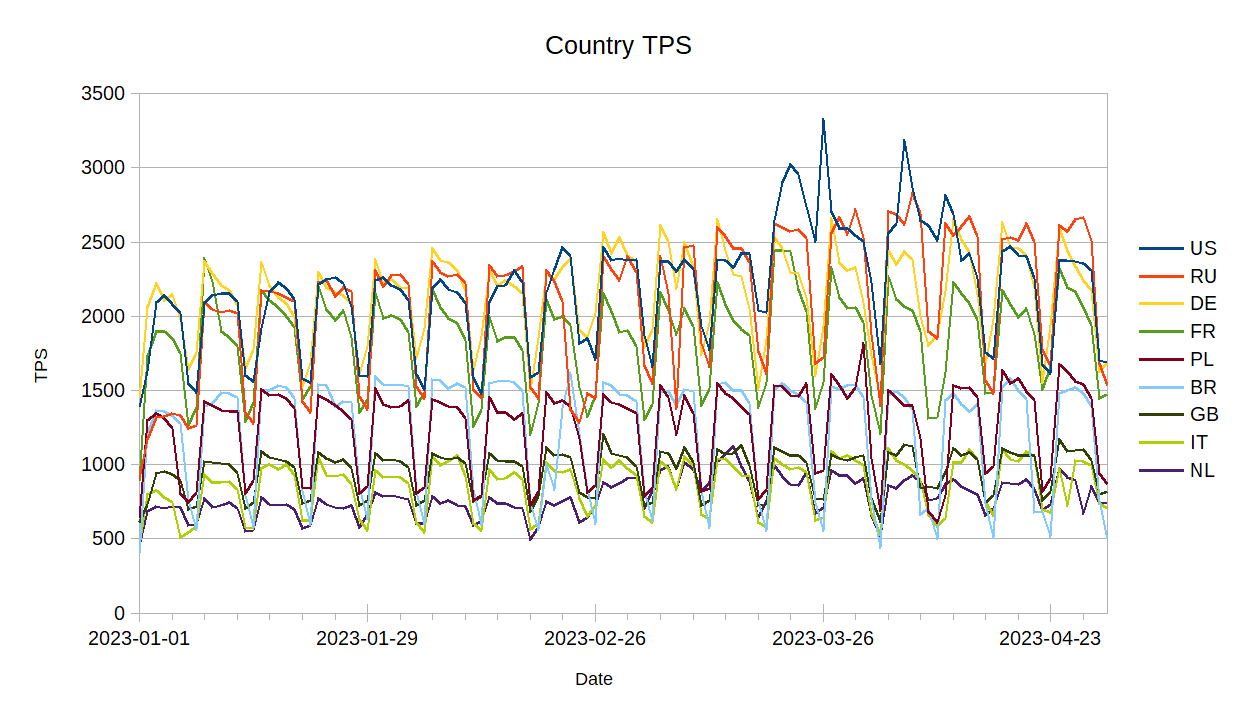

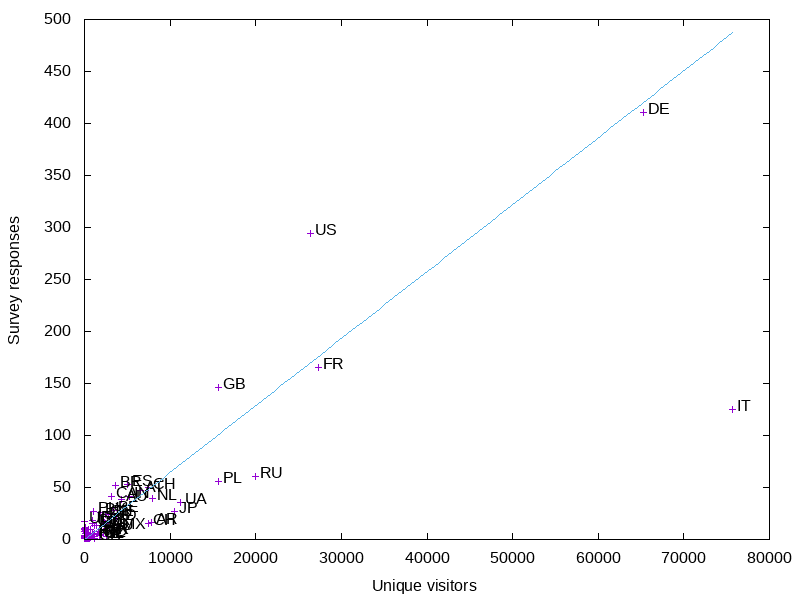

We query our logs with Athena, a hosted version of Presto, a SQL engine that, among other features, can query files on an object store like S3. Automated queries are run daily with tilelog, which generates published files on usage of the Standard Tile Layer.

As you might imagine, 2.8 billion requests is a lot of log data. Fastly offers a number of logging options, and we publish compressed CSV logs to Amazon S3. These logs are large, and suffer from a few problems for long-term use because they:

- contain personal information, like request details and IPs, that, although essential for running the service, cannot be retained forever;

- contain invalid requests, making analysis more difficult;

- are large, being 136 GB/day; and

- become slow to query, being compressed gzip files with the only indexing being the date and hour of the request, which is part of the file path.
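For a sense of scale, the daily log volume can be divided by the daily request count to estimate the average compressed size of one logged request. This sketch assumes that the 136 GB/day figure corresponds to a day with about 2.8 billion requests and that GB means 10^9 bytes; neither is stated exactly above.

```python
# Rough average compressed log size per request.
# Assumptions: 136 GB/day matches a 2.8 billion request day, and 1 GB = 10**9 bytes.
log_bytes_per_day = 136 * 10**9
requests_per_day = 2_800_000_000

bytes_per_request = log_bytes_per_day / requests_per_day
print(f"about {bytes_per_request:.0f} compressed bytes per request")
```

Under those assumptions, each request costs a few tens of bytes once compressed, so the total size comes from the request volume rather than from unusually verbose log lines.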

To solve these problems, we reformat, filter, and aggregate logs, which lets us delete old logs. We’ve done the first two for some time, and are now doing the third.
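To make the filter and aggregate steps concrete, here is a minimal Python sketch that reads a gzip-compressed CSV log, drops rows that do not look like valid tile requests, discards the IP address, and counts requests per hour and zoom level. It is not our actual pipeline: the column layout (`FIELDS`), the function name `aggregate_tile_requests`, the validity check, and the choice of hour and zoom as aggregation keys are all hypothetical and chosen only for illustration.

```python
import csv
import gzip
import io
from collections import Counter

# Hypothetical column layout; the real log format is not described here.
FIELDS = ["timestamp", "client_ip", "path", "status"]


def aggregate_tile_requests(gz_bytes: bytes) -> Counter:
    """Count valid requests per (hour, zoom), without keeping any IPs."""
    counts = Counter()
    with gzip.open(io.BytesIO(gz_bytes), mode="rt", newline="") as fh:
        for row in csv.DictReader(fh, fieldnames=FIELDS):
            # Short rows leave missing columns as None, so default to "".
            parts = (row["path"] or "").strip("/").split("/")
            status = row["status"] or ""
            # Filter: simplified check for a z/x/y path with a 200 status.
            if len(parts) != 3 or not parts[0].isdigit() or status != "200":
                continue
            hour = row["timestamp"][:13]  # e.g. "2023-08-01T12"
            # Aggregate: only the hour and zoom survive; client_ip is dropped.
            counts[(hour, int(parts[0]))] += 1
    return counts


# Made-up sample rows using documentation-range IP addresses.
sample = gzip.compress(
    b"2023-08-01T12:00:01Z,192.0.2.1,/10/511/340.png,200\n"
    b"2023-08-01T12:00:02Z,192.0.2.2,/10/512/340.png,200\n"
    b"2023-08-01T12:00:03Z,192.0.2.3,/favicon.ico,404\n"
    b"2023-08-01T13:00:00Z,192.0.2.4,/3/1/2.png,200\n"
)
result = aggregate_tile_requests(sample)
print(result)
```

Once counts like these exist, the raw rows are no longer needed for this kind of analysis, which is what makes deleting old logs possible.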